Sunday 30 July 2017

Selecting A Compression Algorithm For rr

rr's traces are large. Memory-mapped files account for a lot of that, but the most efficient way to handle them is "zero copy" file cloning so we don't want to compress them during recording. Most of the rest is recordings of data copied into tracee address spaces by the kernel, and plus snapshots of registers, and these data are extremely compressible — often containing long runs of zeroes, for example. For a long time rr has used zlib to compress the non-mapped-file trace data, and zlib's 'deflate' algorithm often achieves compression of 8x or more.

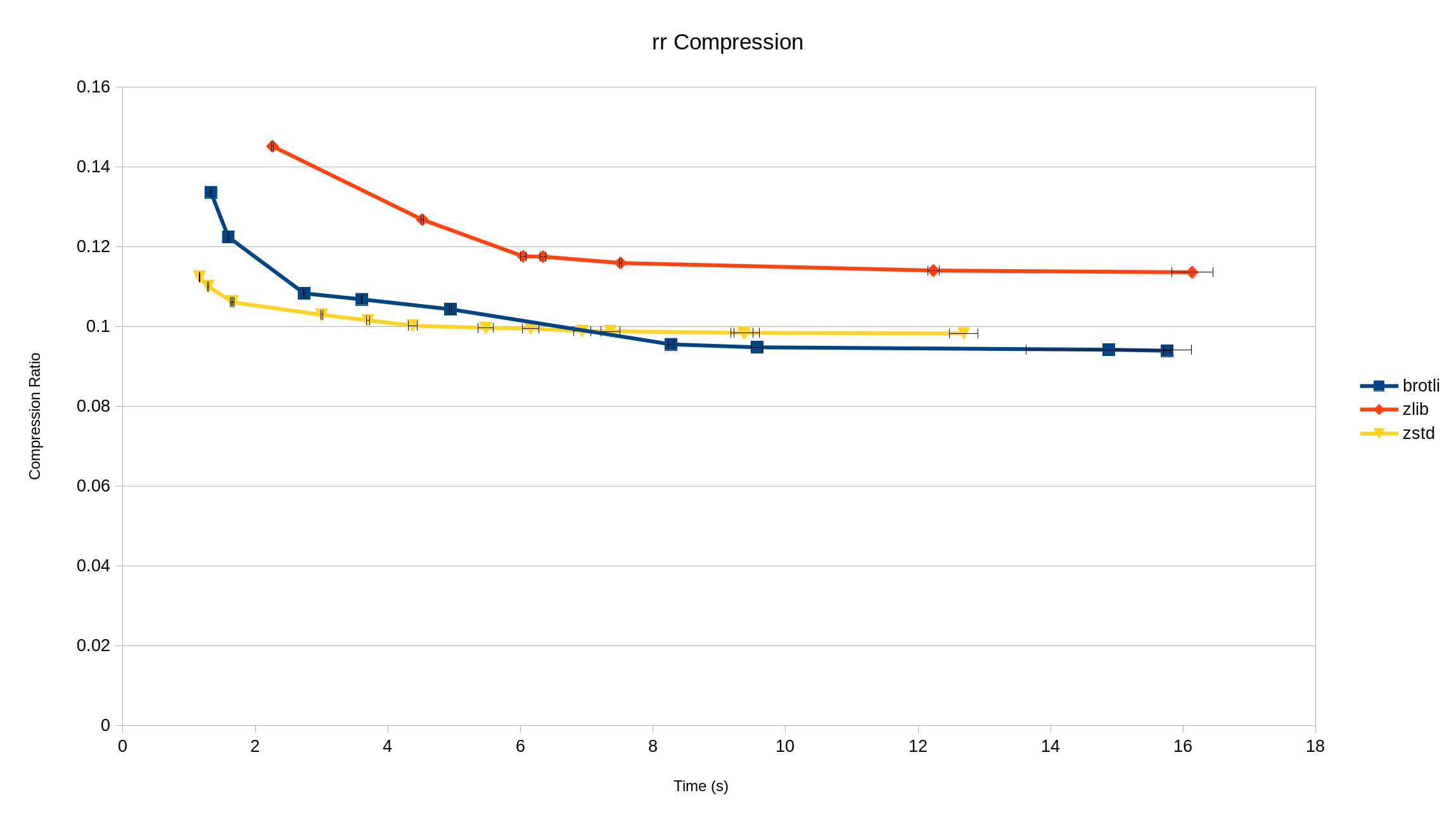

Of course zlib is pretty old and significantly better algorithms exist, so now seems like a good time to reevaluate that decision. I used the Squash framework to compare zlib to two contenders, brotli and zstd, on actual rr trace data: a single, fairly short Firefox run, 828MB uncompressed, treated as independent 1MB chunks because that's what rr does. Here are the results:

I've omitted compression levels that took more than 20 seconds to compress the data. Currently rr uses zlib level 6, which takes just over 12 seconds to compress the data. Data compression occurs in parallel with the rest of recording, and uses multiple cores when it needs to, so in practice is seldom a performance bottleneck.

On this data, both brotli and zstd beat zlib by a significant margin, so we're definitely leaving some performance on the table by sticking with zlib. In particular, given the same time budget, zstd can shave 14% off the size of the non-mapped-file trace data, and brotli can shave off 17%. Alternatively, for the same trace size we could use much less CPU time — zstd level 1 compresses slightly better than zlib level 6, at 10x the speed!

For rr I think brotli level 5 is an obvious choice. For some reason there's a sudden improvement in compression at level 5, where it passes zstd and reaches roughly its optimal compression given a reasonable time budget. At level 5 we're shaving 17% off the current size and also taking 32% off the CPU time.

Apart from the performance, brotli also has a better licensing story. zstd has Facebook's standard patent license, which terminates if you sue Facebook for any patent infringement, and some organisations aren't comfortable with that. Apparently people have done patent searches and haven't found any Facebook patents covering zstd, but that is not wholly reassuring (while also being mystifying — if they're not applying for relevant patents, why not make that clear?). On the other hand, Google has made a clear commitment to license brotli royalty-free with no such conditions. Of course there could be third-party patents, but if they became a problem it would be easy to switch rr's algorithm (especially compared to the trouble they would cause for Web sites and browsers!).

Of course there are lots of other compression algorithms I could evaluate, but I guess if there are any further gains to be had, they would be very minor.

Update Unfortunately Ubuntu doesn't have a brotli library package. (Fedora does.) So, using brotli would mean everyone building rr on Ubuntu has to build brotli themselves first, or we vendor brotli into rr (or we do something truly insane like have rr pull and build brotli at build time if necessary). None of these approaches are appealing :-(. I guess there's also "rewrite rr in Rust so we can use cargo to have reasonable dependency management", which is appealing but not currently practical.

I'm leaning towards vendoring brotli into rr.

Comments